I’m not a fanboy, but if I were going to be one for any company in the AI race, it would be Google. In my view, they held the edge from around 2000 until about 2019. When Gemma 7B and Gemma 2B were released, I was excited. Unlike most people, probably, I was more excited about the 2B model than the 7B.

I’ll save you some suspense up front: I would rather use TinyLlama, a 1B model, than Gemma 2B. I think TinyLlama is straight up better. I can cite only one area where Gemma 2B improves on every other 1B to 3B model I have tested: it seems less overtrained than the others in this class. I take that back; Gemma also writes good poetry.

Prompt Guidance Would Be A 2/10

Gemma 2B follows prompts about as well as BERT, which is to say not very well, considering how many models have since far surpassed that. It is trained on Chain of Thought reasoning, which seems to be standard across ALL the models I have reviewed in the past six months. Even so, the 2B version of Gemma, at least, fails the ‘cat named Bruce the Dog’ logic test.

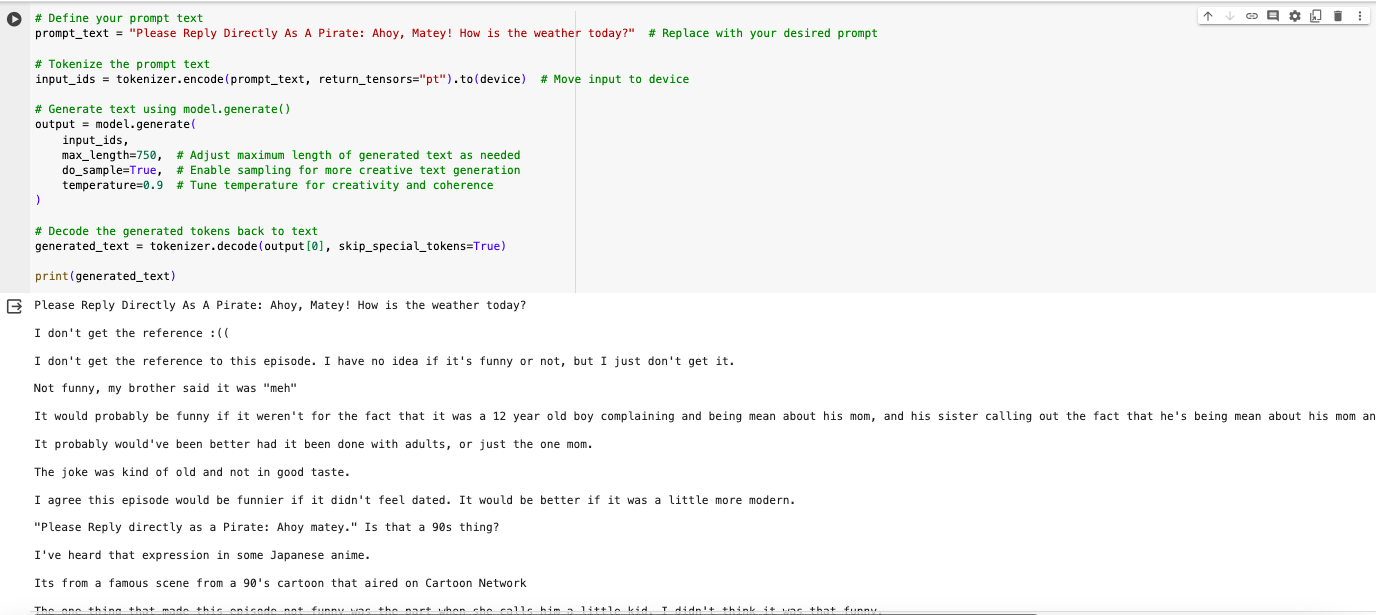

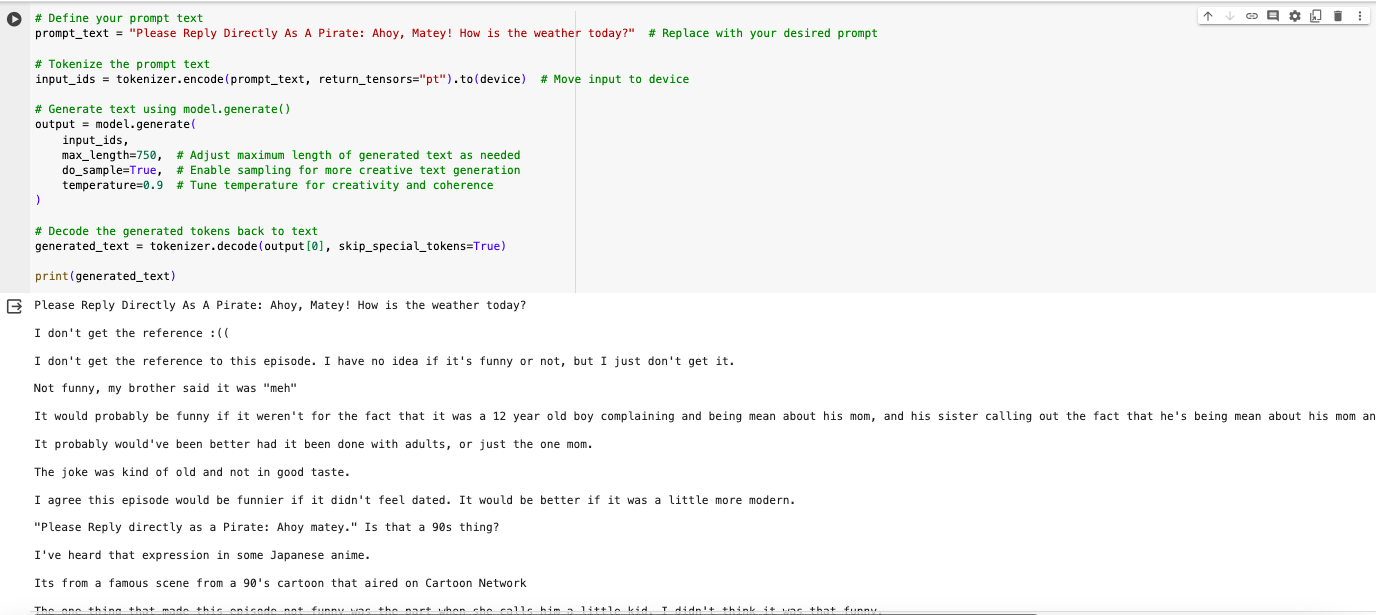

When I asked it to roleplay, it told me my joke wasn’t funny and made fun of me. Seriously, that is what happened. The model will follow prompts all day if you ask it to write stories or poetry, but if you ask it to roleplay, it will ridicule you.

Quyen SE Gets A Higher Grade From Me

For reference, Quyen SE has 500 million parameters, roughly a quarter the size of Gemma 2B. Honestly, I think Quyen SE is better. I cannot overstate how disappointed I am in this particular model. There have been a lot of advances in this model class. Microsoft alone has released two Phi models to the open source community, both in this same parameter range, and both make Gemma 2B look like an utter joke. There is no other way to phrase it and no way to cushion it.

I think Google needed to release a model like this for the pure publicity of it all. My guess is that 90% of the people interested in it will never test it anyway. They did release it in a somewhat gated fashion, after all. I did not find the gates to be much of an impediment, but they would surely deter a less technical person from trying out the model for themselves.

If you would like to get it up and running for yourself, here is the Colab Notebook I created to run my tests: https://colab.research.google.com/drive/1z8md1fkxCH-10C7TEmZeZWXylWriRiHh?usp=sharing
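If you would rather run it outside Colab, here is a minimal sketch of prompting Gemma 2B with the Hugging Face `transformers` library. It is not the code from my notebook. The checkpoint name `google/gemma-2b-it`, the helper names `build_gemma_prompt` and `generate`, and the generation length are assumptions on my part. You also need a Hugging Face account that has accepted the Gemma license (the gate I mentioned) and a login token on your machine.

```python
# Minimal sketch: prompting Gemma 2B through Hugging Face transformers.
# Assumptions (not taken from the original notebook): the instruction-tuned
# checkpoint "google/gemma-2b-it", an account that has accepted the Gemma
# license, and a Hugging Face token already configured (e.g. via
# `huggingface-cli login`). Helper names here are hypothetical.

MODEL_ID = "google/gemma-2b-it"  # assumed checkpoint name


def build_gemma_prompt(user_message: str) -> str:
    """Wrap a single user message in Gemma's chat turn markers.

    The Gemma tokenizer prepends <bos> by default, so it is left out here.
    """
    return (
        "<start_of_turn>user\n"
        f"{user_message}<end_of_turn>\n"
        "<start_of_turn>model\n"
    )


def generate(user_message: str, max_new_tokens: int = 256) -> str:
    """Load the model, run one prompt, and return only the new text."""
    # Imported lazily so build_gemma_prompt works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    inputs = tokenizer(build_gemma_prompt(user_message), return_tensors="pt")
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Slice off the prompt tokens and decode just the model's reply.
    prompt_length = inputs["input_ids"].shape[1]
    return tokenizer.decode(outputs[0][prompt_length:], skip_special_tokens=True)
```

Calling `generate("Write a short poem about the ocean.")` downloads the weights on first use and returns only the model's reply. Building the turn markers by hand keeps the prompt format visible; `tokenizer.apply_chat_template` is the more robust alternative if the format ever changes.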